Shilong Liu Homepage

Hi! This is Shilong Liu (刘世隆). I am a Postdoctoral Research Fellow at the Princeton AI Lab, Princeton University, working with Prof. Mengdi Wang. I obtained my Ph.D. from the Department of Computer Science and Technology, Tsinghua University, under the supervision of Prof. Lei Zhang, Prof. Hang Su, and Prof. Jun Zhu. I received my B.Eng. degree from the Department of Industrial Engineering, Tsinghua University, in 2020.

Before joining Princeton, I was a Research Scientist at ByteDance Seed.

During my Ph.D. and research career, I have had the privilege to intern and collaborate at leading research labs, including ByteDance, NVIDIA Research, Microsoft Research Redmond, IDEA Research, and Shengshu Tech, working with amazing mentors such as Guilin Liu, Zhiding Yu, Chunyuan Li, Hao Cheng, and Jianwei Yang.

🎯 Research Interests

My research lies at the intersection of LLM Agents, Multimodal Learning, Computer Vision, and Physical Intelligence. I am broadly interested in building vision-language-action systems that can see, reason, and act in open environments.

🚀 Work With Me

I am looking for collaborators and self-motivated interns excited about agent and multimodal AI research. Contact me by email at slongliu86@gmail.com or sl8264@princeton.edu.

🔬 Representative Works

-

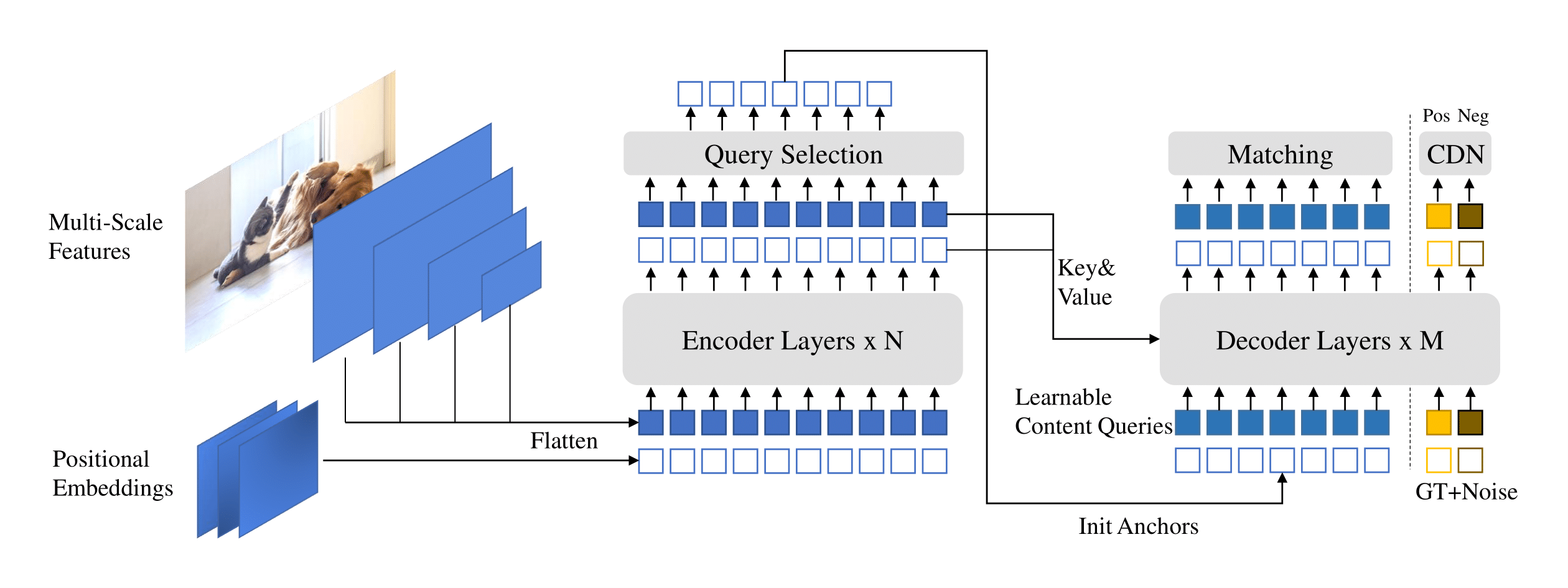

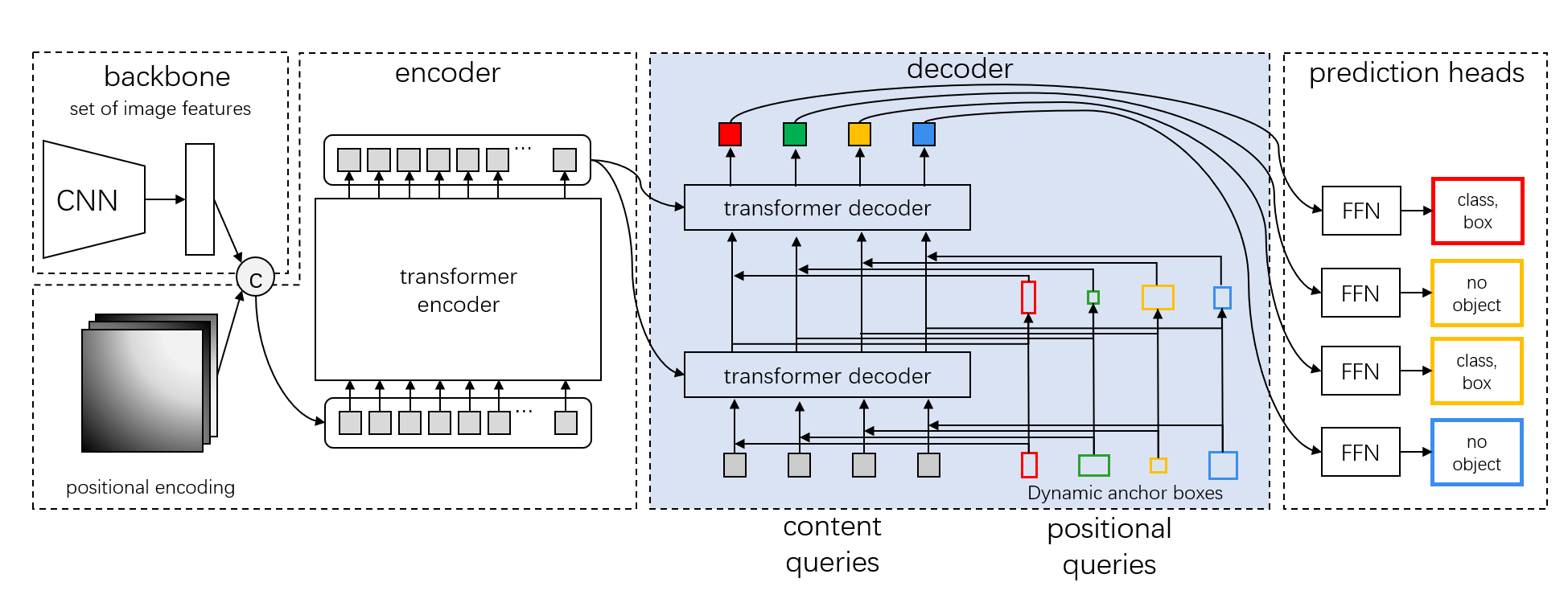

Visual Perception & DETR Evolution

We introduced a series of Transformer-based detection models including DAB-DETR, DN-DETR, DINO, MaskDINO, and Stable-DINO. DINO was the first DETR-like model to achieve state-of-the-art performance on the COCO object detection leaderboard.

-

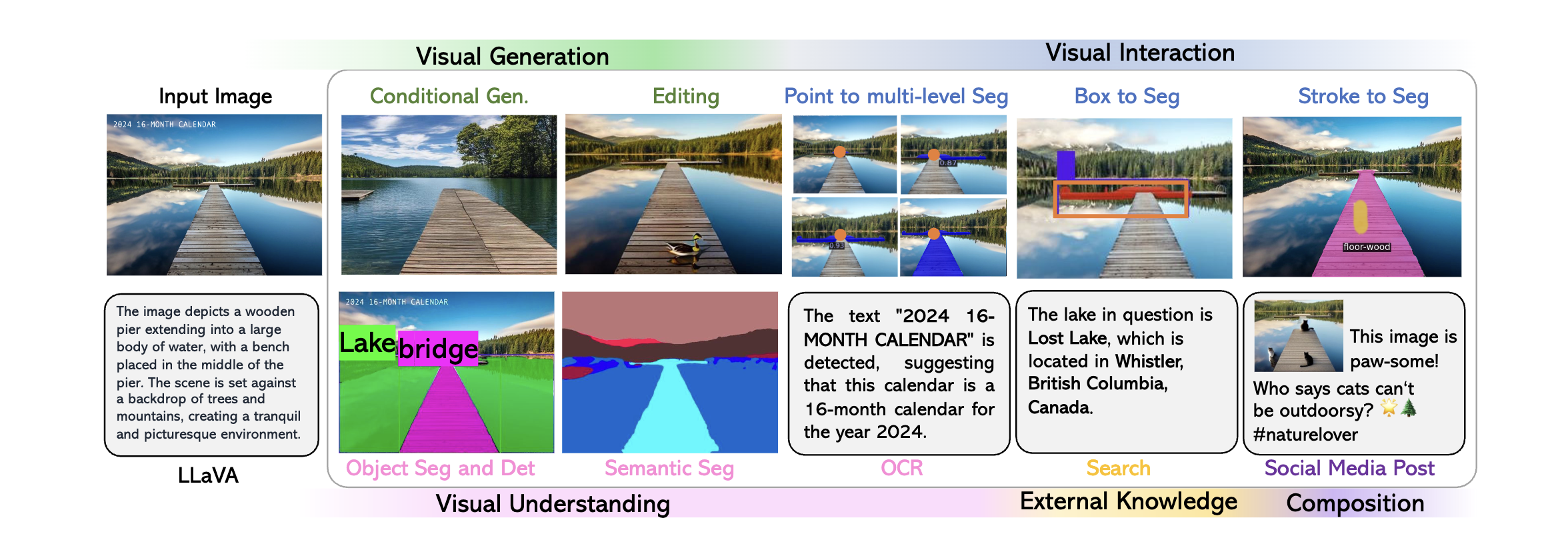

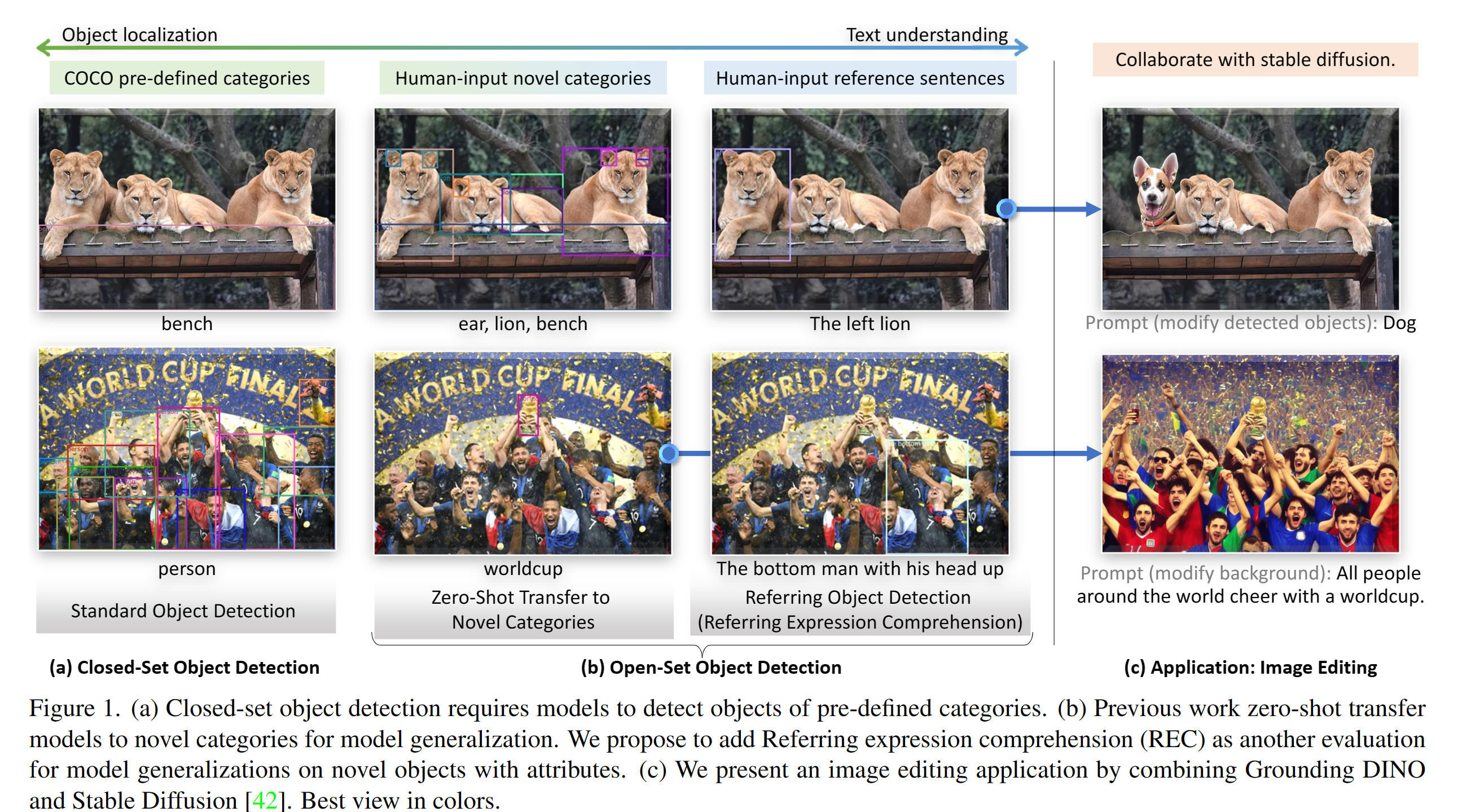

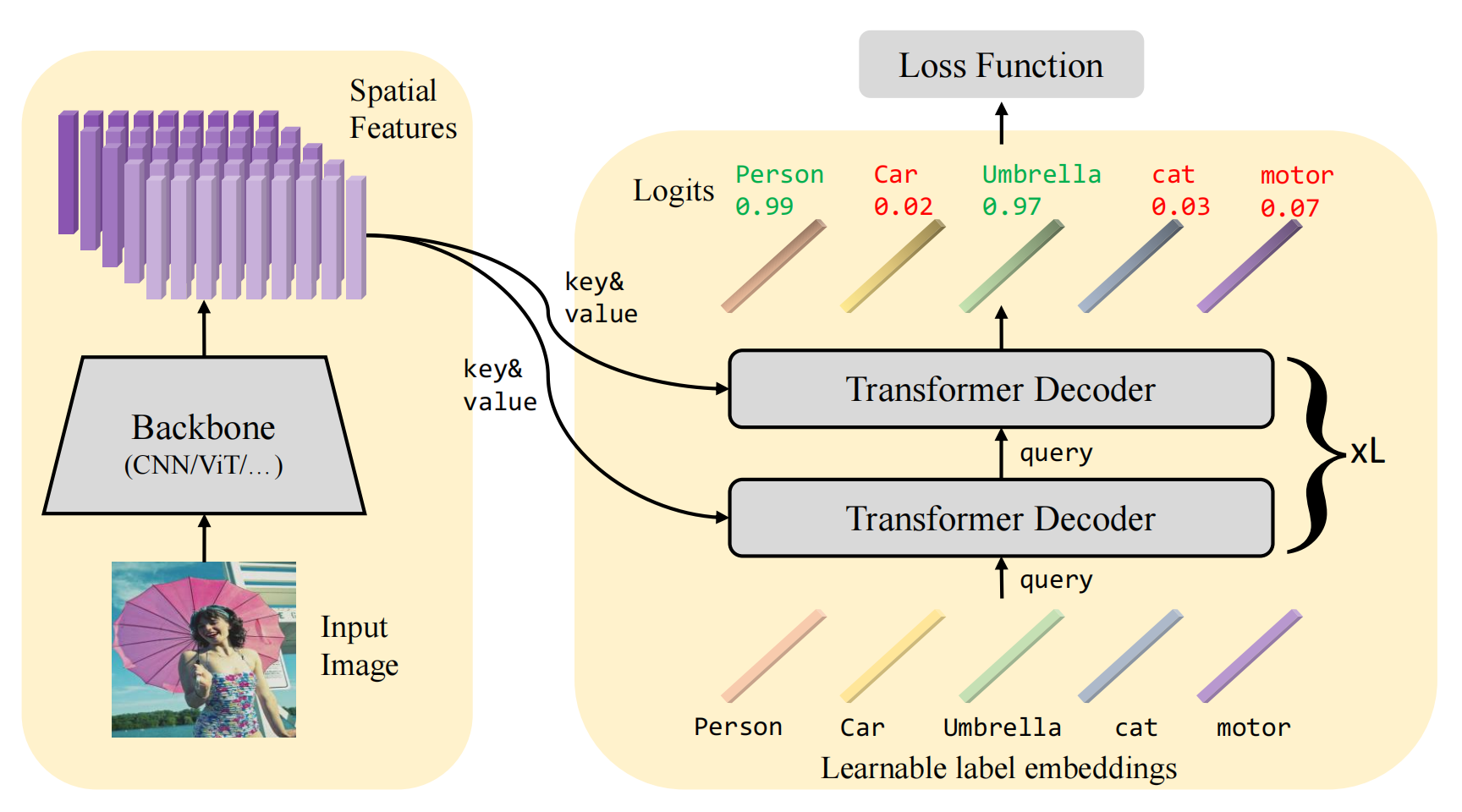

Open-world Visual Understanding & Multimodal Models

We developed Grounding DINO and Grounded-SAM, empowering models to detect and segment anything. Grounding DINO is now the most downloaded zero-shot object detection model on Hugging Face and receives over 2 million downloads per month. The subsequent series (Grounding DINO 1.5, 1.6, and DINO-X) continues to push open-world perception forward.

- LLM Agents & Generalist Intelligence

We proposed Alita, a generalist agent that ranked 1st on the GAIA benchmark, surpassing OpenAI Deep Research. We also introduced LLaVA-Plus, enhancing multimodal large language models with vision-expert tool usage, and Crab, a Python-based framework for agent environment simulation and benchmarking. Our recent work extends agents into specialized domains such as medical reasoning and history cognition.

🏆 Awards & Recognitions

- WAIC Yunfan Award – Rising Star, 2024 (15 people/year)

- KAUST AI Rising Star, 2024 (Top 15%)

- CCF-CV Academic Emerging Scholar Award, 2023 (3 people/year)

- Innovation 84 Scholarship, 2024

📈 Impact

- 11,000+ Google Scholar citations

- 30,000+ GitHub stars

- 4 papers selected as Top 15 Most Influential Papers by Paper Digest

If you are interested in multimodal agents or open-world vision models, feel free to reach out at:

📧 slongliu86 [AT] gmail.com or sl8264 [AT] princeton.edu

(Note: the Tsinghua email is deprecated; please use Gmail or Princeton email instead.)

Feel free to add me on WeChat: SLONG_88 (please include a short self-introduction).

| Google Scholar | GitHub | Zhihu 知乎 | CV (Google Drive) |

News

| Date | News |
|---|---|
| Nov 11, 2024 | Invited talk at EECS 542, University of Michigan. [Slides] |
| Jul 22, 2024 | I am starting my internship at NVIDIA, collaborating with Guilin Liu and Zhiding Yu. See you in the Bay Area, USA. |
| Jul 4, 2024 | I received the 2024 WAIC Yunfan Award – Rising Star. [News] |
| Jul 3, 2024 | 6 papers were accepted by ECCV 2024. See their details: |
| May 17, 2024 | We introduce Grounding DINO 1.5, our most powerful open-world object detection model series. View our blog and tech report for more details. Try our demo. |
| Feb 19, 2024 | Invited talk at the Rising Stars in AI Symposium 2024 at KAUST. I really enjoyed the trip. |
| Dec 1, 2023 | DINO and DAB-DETR were recognized as the most influential papers for ICLR 2023 and ICLR 2022, respectively. Mask DINO was selected as one of the most influential papers for CVPR 2023. |
| Nov 5, 2023 | I received the CCF-CV Academic Emerging Scholar Award 2023 (CCF-CV 学术新锐学者, 3 people per year)! Thanks to the China Computer Federation. |
| Sep 29, 2023 | Invited talks at the Institute for AI Industry Research (AIR), Tsinghua University; the HPC-AI Lab, National University of Singapore; the Gaoling School of Artificial Intelligence, Renmin University of China (RUC); and the VALSE Student Webinar. View the slides here (slides about detection, grounding, and large language models). |
| Mar 13, 2023 | We release Grounding DINO, a strong open-set object detection model that achieves the best results on open-set object detection tasks. It achieves 52.5 zero-shot AP on COCO detection without any COCO training data, and 63.0 AP on COCO after fine-tuning. Code and checkpoints will be available here. |
| Sep 22, 2022 | We release detrex, a toolbox that provides state-of-the-art Transformer-based detection algorithms. It includes DINO with better performance. You are welcome to use it! |